I recently read Daniel Kahneman's book Noise, which clarified for me the limitations of the applicant interview process that I've observed. The residency interview process (and, in reality, most professional job interview processes) is subject to a great deal of variability. Depending on the interviewer, the applicant, and various uncontrolled conditions, an applicant can do well or not so well. Having gone through a couple of residency applicant interview cycles, I realized how flawed the process was. I felt validated when Kahneman went into depth about exactly the flaws in human judgment that introduce so much randomness into the interview process. He also discusses Google's approach to interviewing applicants, which completely resonated with me. Here is what I learned:

- Up to 4 interviewers are enough. Adding interviewers beyond 4 provides minimal added value.

- Use structured interview questions, specifically behavioral questions, rather than questions about past projects or items on the CV. Tailor questions to what the applicant can become (rather than what the applicant is currently capable of).

- Criteria should be judged independently. At Google, it sounds like interviewees do video interviews, the response to each question is judged separately and independently, and then an aggregate summary is used.

- Use ranking instead of score-based review. If there are 10 applicants, the interviewers should rank them from 1 to 10 instead of giving each interviewee a score per criterion. Humans are better at ranking than at scoring.

- Use aggregate scoring (only after independent review).

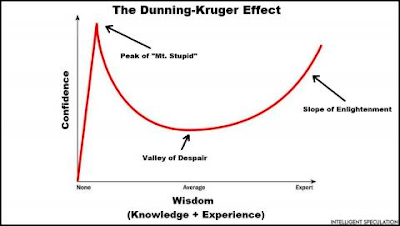
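To make the "rank independently, then aggregate" idea concrete, here is a minimal sketch of one simple way to combine rankings: average each applicant's rank position across interviewers (a Borda-style mean rank). The interviewer names, applicants, and the choice of mean rank are all hypothetical illustrations, not Google's or Kahneman's actual procedure.

```python
from statistics import mean

# Hypothetical example: each interviewer independently ranks the same
# applicants (first in the list = rank 1 = best). All data is made up.
rankings = {
    "interviewer_a": ["Applicant 1", "Applicant 3", "Applicant 2", "Applicant 4"],
    "interviewer_b": ["Applicant 3", "Applicant 1", "Applicant 4", "Applicant 2"],
    "interviewer_c": ["Applicant 1", "Applicant 2", "Applicant 3", "Applicant 4"],
}


def aggregate_by_mean_rank(rankings: dict[str, list[str]]) -> list[tuple[str, float]]:
    """Combine independent rankings by averaging each applicant's rank position.

    Assumes every interviewer ranked the same set of applicants exactly once
    (no ties). Returns (applicant, mean_rank) pairs sorted best-first; equal
    mean ranks are broken alphabetically so the output is deterministic.
    """
    if not rankings:
        return []
    applicants = set(next(iter(rankings.values())))
    for order in rankings.values():
        if set(order) != applicants or len(order) != len(applicants):
            raise ValueError("every interviewer must rank the same applicants exactly once")
    # Collect each applicant's rank from every interviewer.
    positions: dict[str, list[int]] = {a: [] for a in applicants}
    for order in rankings.values():
        for rank, applicant in enumerate(order, start=1):
            positions[applicant].append(rank)
    averaged = [(a, mean(ranks)) for a, ranks in positions.items()]
    return sorted(averaged, key=lambda pair: (pair[1], pair[0]))


if __name__ == "__main__":
    for place, (applicant, avg) in enumerate(aggregate_by_mean_rank(rankings), start=1):
        print(f"{place}. {applicant} (mean rank {avg:.2f})")
```

With the made-up rankings above, Applicant 1 comes out first (mean rank 1.33) and Applicant 4 last (3.67). Averaging ranks is only one option; the key point it illustrates is that each interviewer's ranking is formed on its own and combined only afterward.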

A process that some of my colleagues in other fields use is to ask applicants to rate their own proficiency in various aspects of the job and then test them. For example, for a business analytics position, the applicant may be asked to rate their proficiency in SQL, Tableau, Power BI, and other tools. The applicant is then given the software and asked to build a few deliverables or write some code. This approach tests both the applicant's humility in self-reporting and their actual competence.
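One way to compare self-reported proficiency against tested performance is to put both on the same 0-1 scale and look at the gap per skill. This is a hypothetical sketch: the 1-5 self-rating scale, the 0-100 test score, and the linear rescaling are assumptions for illustration, not a description of how my colleagues actually score applicants.

```python
from dataclasses import dataclass


@dataclass
class SkillAssessment:
    skill: str
    self_rating: int   # hypothetical 1-5 self-reported proficiency
    test_score: float  # hypothetical 0-100 score on the practical task


def calibration_gap(a: SkillAssessment) -> float:
    """Return self-rating minus test performance, both rescaled to 0-1.

    Positive values mean the applicant rated themselves higher than they
    performed (possible overconfidence); negative values mean they rated
    themselves lower than they performed (possible humility).
    """
    if not 1 <= a.self_rating <= 5:
        raise ValueError("self_rating must be between 1 and 5")
    if not 0 <= a.test_score <= 100:
        raise ValueError("test_score must be between 0 and 100")
    self_scaled = (a.self_rating - 1) / 4  # maps 1..5 onto 0..1
    test_scaled = a.test_score / 100       # maps 0..100 onto 0..1
    return self_scaled - test_scaled


if __name__ == "__main__":
    # Made-up example for a business analytics applicant.
    assessments = [
        SkillAssessment("SQL", self_rating=5, test_score=60),
        SkillAssessment("Tableau", self_rating=3, test_score=75),
        SkillAssessment("Power BI", self_rating=2, test_score=25),
    ]
    for a in assessments:
        print(f"{a.skill}: gap {calibration_gap(a):+.2f}")
```

In this made-up example the applicant overrates their SQL skill (+0.40), underrates their Tableau skill (-0.25), and rates Power BI accurately (+0.00). A linear rescaling is the simplest choice; a real program would need to decide how the two scales actually correspond.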

Sometimes I do a thought exercise and wonder what I would do if I could design a residency applicant interview process. I would probably do something along these lines:

1. Train and validate an AI model to work alongside me in sifting through hundreds of applications, and compare and contrast my findings with the AI's, so that we review ALL the applicants who would be a good fit for the program. The AI would also surface known biases in the program for the program's awareness. Oftentimes, we apply superficial and shallow filters that can overlook individuals with very high potential.

2. For the interview process, I like Google's approach and would probably pilot a variant using panel interviews with standardized behavioral questions and a maximum of 4 interviewers.

3. I would ask interviewers to rank (not score) applicants on key criteria, and then aggregate the results to generate the final rank list.

4. If it were feasible and permitted, I would ask all interviewees to report their proficiency in key diagnostic radiology skills, then have them generate mock reports for a few radiology studies, and review those reports.

5. After trainees match and have gone through their early training experiences, I would regroup with the interviewers to review the outcomes, learn from our strengths and weaknesses, make changes to improve the process, and keep iterating.

6. At some point in the future, I would train and validate an AI model to read facial expressions (following Paul Ekman's line of research) to predict outcomes (e.g., good fit, eventual competence) and have the AI work alongside interview committee members to provide comparative information and data.
